To connect your software to LM Studio, download LM Studio and enable its local API server by following these steps:

Download LM Studio: Visit the LM Studio website and download the version compatible with your operating system (Mac, Windows, or Linux).

Install LM Studio: Once the download is complete, install LM Studio on your machine by following the installation instructions provided.

Open LM Studio: Launch LM Studio on your computer.

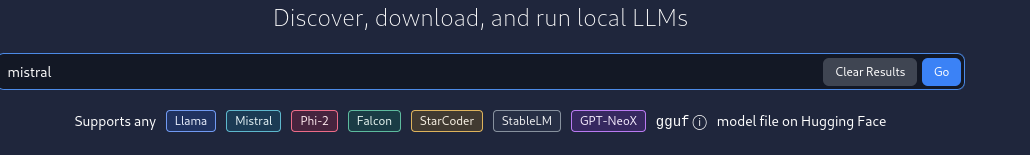

Search for AI Models: LM Studio offers a search tab where you can explore the vast library of AI models available on platforms like Hugging Face. Use the search feature to find the specific model you need for your software.

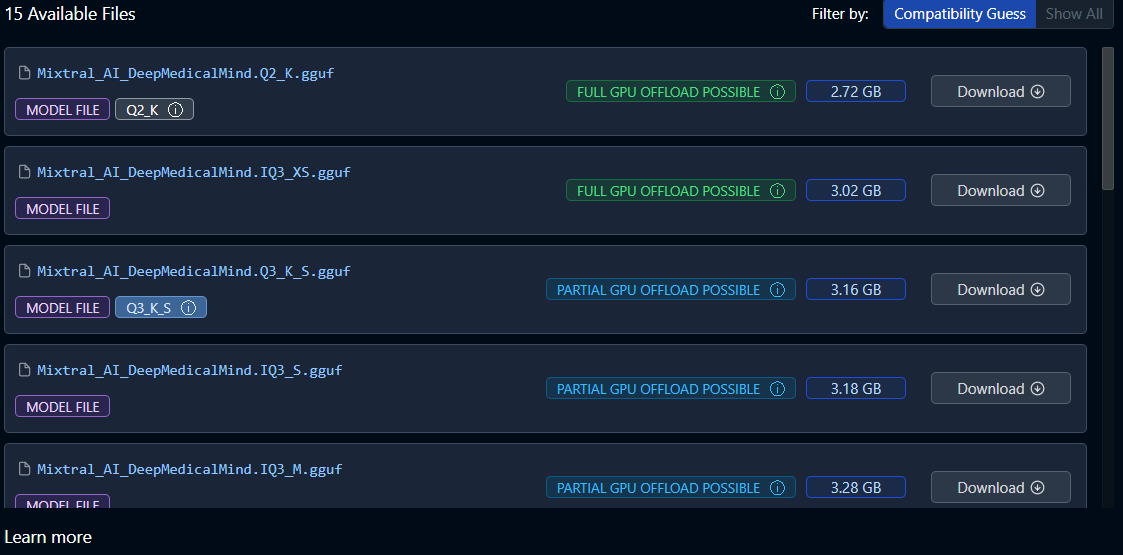

Check GPU Compatibility: Before you download a model, LM Studio indicates whether the model can be fully offloaded to your GPU, that is, whether it fits entirely in GPU memory. A model that runs fully on the GPU generally performs best on your machine.

Download Model: Once you've found the desired model and confirmed its compatibility with your GPU, download the model files directly from Hugging Face repositories using LM Studio.

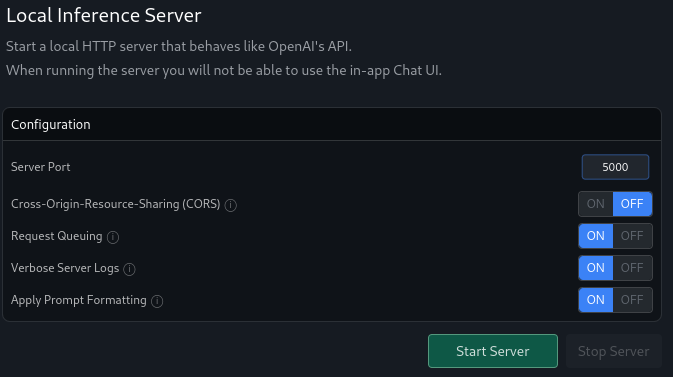

Activate API: To activate the API for your software, navigate to your software's main window and locate the "OpenAI API" button. Click on this button to access the API settings.

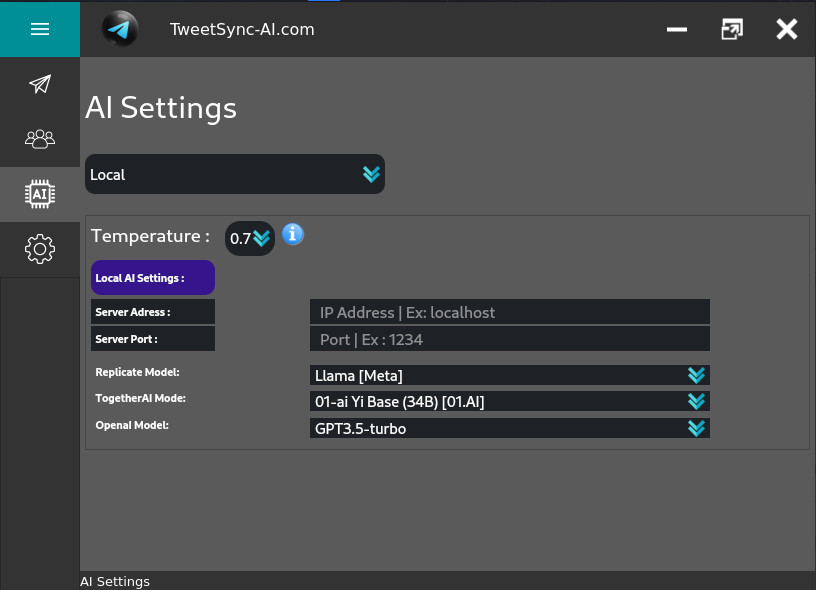

Switch to Local API: In the API settings window, you'll see an option to switch to a Local API. Click on this option, then enter "localhost" as the IP address and the port number of the LM Studio server (typically 1234, unless you changed it) in the designated input fields.

One-Click Activation: LM Studio lets you start its API server with a single click. Once you've entered the required information in your software, switch to LM Studio and click the "Start Server" button to finalize the setup.

Start the Action: With the API activated, our software can now send requests to your LM Studio model.

Once you have completed these steps, LM Studio's local API is active and your software can use the model you loaded.
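To check the LM Studio connection outside of the software, a request can be sent to the local server by hand. The following is a minimal sketch, assuming the server exposes an OpenAI-style `/v1/chat/completions` endpoint on port 1234. The function names, the model name, and the `temperature` value are illustrative placeholders and are not taken from the software itself.

```python
import json
import urllib.request


def build_chat_request(host, port, model, prompt):
    """Return (url, body_bytes, headers) for an OpenAI-style chat completion call."""
    url = f"http://{host}:{port}/v1/chat/completions"
    payload = {
        "model": model,  # assumption: use the model identifier shown in LM Studio
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.7,  # illustrative value only
    }
    headers = {"Content-Type": "application/json"}
    return url, json.dumps(payload).encode("utf-8"), headers


def send_chat_request(host, port, model, prompt, timeout=60):
    """POST the request to the local server and return the reply text."""
    url, body, headers = build_chat_request(host, port, model, prompt)
    request = urllib.request.Request(url, data=body, headers=headers, method="POST")
    with urllib.request.urlopen(request, timeout=timeout) as response:
        data = json.loads(response.read().decode("utf-8"))
    # OpenAI-style responses carry the text in choices[0].message.content
    return data["choices"][0]["message"]["content"]
```

With the server started and a model loaded, calling `send_chat_request("localhost", 1234, "your-model-name", "Say hello.")` should return the model's reply. A connection error usually means the server is not running or the port does not match.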

Ollama Software:

Download Ollama: Visit the Ollama website and download the appropriate version for your operating system.

Install and Open Ollama: Install the software following the on-screen instructions and launch it.

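Choose and Load Model: Select the desired AI model and download it so that it is available for local use. Ollama is mainly operated from a terminal, where a model can be downloaded with the `ollama pull <model-name>` command.

Start API Server: Ollama normally starts its API server automatically while the application is running and listens on port 11434 by default. If the server is not running, start it with the `ollama serve` command, then enter that port number in your software to make the API accessible.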
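To confirm that Ollama is serving the model you loaded, you can ask it which models are installed. The sketch below assumes Ollama's `/api/tags` endpoint on the default port 11434; the helper names are hypothetical and chosen only for this example.

```python
import json
import urllib.request


def parse_model_names(tags_json):
    """Extract model names from the JSON text returned by /api/tags."""
    data = json.loads(tags_json)
    return [entry["name"] for entry in data.get("models", [])]


def list_ollama_models(host="localhost", port=11434, timeout=5):
    """Fetch the list of locally installed models from a running Ollama server."""
    url = f"http://{host}:{port}/api/tags"
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return parse_model_names(response.read().decode("utf-8"))
```

If `list_ollama_models()` returns an empty list, the server is reachable but no model has been downloaded yet.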

Jan Software:

Download Jan: Access the Jan website to download the software for your system.

Install and Launch Jan: Follow the installation guide to set up Jan on your device and start the application.

Select and Load Model: Browse through the available models, choose one, and load it for local use.

Initiate API Server: Open the API configuration settings, select the port, and start the server to activate the local API.

LocalAI:

Download LocalAI: Head to the LocalAI website and download the software compatible with your platform.

Install and Open LocalAI: Install the software by following the provided instructions and open it on your machine.

Select and Load Model: Find and select the desired AI model, then load it into LocalAI.

Start API Server: Go to the API settings, choose a port, and start the server to enable the local API for use with your software.
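Whichever tool you use, the software can only connect if something is listening on the port you entered. The following sketch checks which local servers are reachable. The port numbers in `DEFAULT_PORTS` are the commonly documented defaults for each tool and are assumptions here; replace them with the ports you actually configured.

```python
import socket

# Assumed default ports; adjust to match your own settings.
DEFAULT_PORTS = {
    "LM Studio": 1234,
    "Ollama": 11434,
    "Jan": 1337,
    "LocalAI": 8080,
}


def is_port_open(host, port, timeout=0.5):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def find_running_servers(host="localhost", ports=DEFAULT_PORTS):
    """Return the names of the tools whose port accepts connections."""
    return [name for name, port in ports.items() if is_port_open(host, port)]
```

An open port only shows that some program is listening there, not that it is the expected tool, so treat the result as a first check rather than proof that the API is ready.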

For detailed video guides on how to install and use these alternative methods, visit the Videos section.